Quantum Information, Game Theory, and the Future of Rationality

A Critical Look at “Enhanced Fill Probability Forecasting in Bond Trading with Quantum-Based Data Transforms”

The paper does not offer anything by way of quantum advantage. However, it offers much to the emerging market around the nascent technology of quantum information processing and computing.

9/25/2025 · 4 min read

Quantum computing has long promised transformative applications in areas like optimization, machine learning, and finance. With billions earmarked worldwide for quantum R&D through government programs, corporate budgets, and venture capital, every new paper in “quantum finance” gets attention. With so much money flying around, hype often outpaces substance; a little of that is okay, since some hype is crucial for a technology's growth. A preprint that appeared online today, Enhanced Fill Probability Forecasting in Bond Trading with Quantum-Based Data Transforms, written by researchers at HSBC Holdings and IBM, takes aim at a practical financial problem: predicting whether a bond trade order will be filled. The authors claim that inserting a quantum data transformation step into a machine-learning pipeline yields better predictive accuracy.

At first glance, this sounds like a step toward real-world quantum advantage — outperforming classical approaches on a meaningful financial task. But a closer look suggests caution: the results, while intriguing, fall short of establishing genuine quantum advantage.

What the Paper Claims

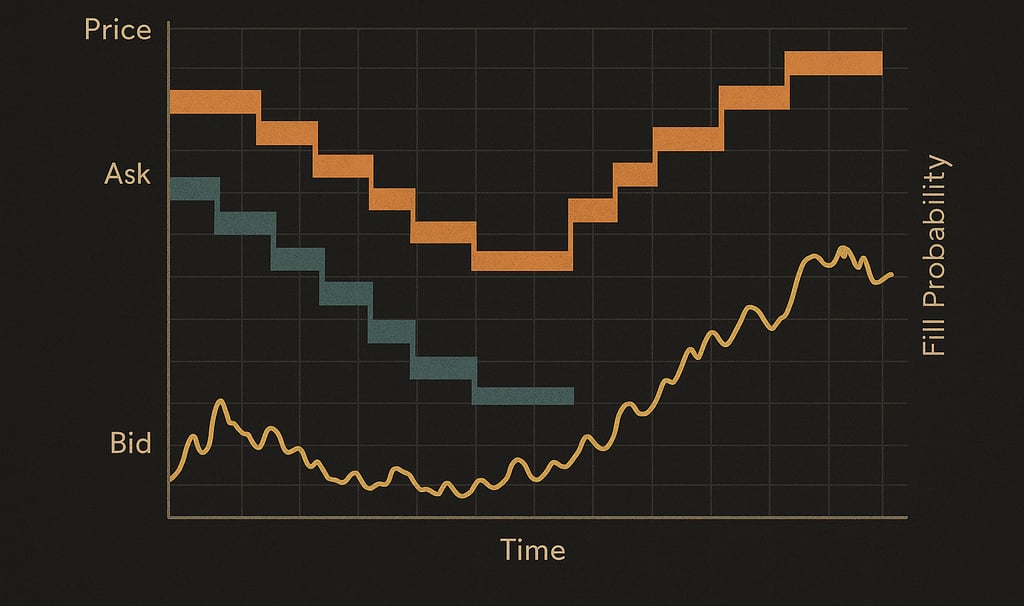

The authors design a hybrid workflow:

Start with production-scale intraday trade event data.

Apply a quantum transform to the data — running it through a quantum circuit, either on real hardware or simulated.

Feed the transformed data into classical ML models.

Compare performance against baselines (original features, or features transformed via noiseless quantum simulation).

Their headline result: an improvement of up to about 34 percent compared with some of their chosen baselines. Importantly, this is not a 34-point jump in raw accuracy, but a relative improvement — meaning that if a baseline model achieved, say, 50 percent accuracy, the hardware-transformed version might reach around 67 percent. In other words, the headline figure reflects gains against particular reference models, not an absolute leap in predictive power. More curiously, they suggest the noise in current quantum hardware might actually contribute positively. Hmmm.
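To make the relative-versus-absolute distinction concrete, here is a small arithmetic sketch. The 50 percent baseline is the hypothetical figure used above, not a number taken from the paper, and the variable names are my own.

```python
def relative_improvement(baseline: float, improved: float) -> float:
    """Gain of `improved` over `baseline`, expressed as a fraction of the baseline."""
    return (improved - baseline) / baseline


baseline_acc = 0.50   # hypothetical baseline metric (illustrative, not from the paper)
relative_gain = 0.34  # the "up to about 34 percent" headline figure

# A 34 percent relative gain scales the baseline; it does not add 34 points to it.
improved_acc = baseline_acc * (1 + relative_gain)
absolute_gain_points = (improved_acc - baseline_acc) * 100

print(f"improved metric: {improved_acc:.2f}")
print(f"absolute gain:   {absolute_gain_points:.0f} percentage points")
print(f"relative gain:   {relative_improvement(baseline_acc, improved_acc):.0%}")
```

On this hypothetical baseline, the same result can be reported as either a 17-point gain or a 34 percent gain, and the second framing sounds a lot bigger.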

Strengths of the Paper

It is important to credit what the paper does well:

Ambition and relevance. Rather than toy datasets, the authors use real trading data and a real financial prediction problem.

Hybrid approach. They don’t try to build an all-quantum pipeline, but instead plug a quantum feature transform into a classical ML pipeline. This is pragmatic and fits with how near-term quantum devices may be used.

Empirical evaluation. The study includes backtesting, giving some grounding in practice.

Unusual observation. The suggestion that hardware noise may actually improve performance is provocative and could inspire further exploration.

The Core Criticism: No Clear Quantum Advantage

The problem is not that the paper shows nothing. Rather, the results do not amount to quantum advantage in the meaningful sense: the use of correlations or transformations that cannot be reproduced by classical information processing.

1. Quantum Advantage is Not Defined or Demonstrated

The paper reports empirical gains, but never shows that the quantum-transformed features encode correlations that are inherently quantum (i.e. impossible to simulate classically at scale). Without this, the result is: “This data transform helped our ML model,” not “This data transform is uniquely quantum.”

2. Weak Classical Baselines

The comparisons are against raw data and noiseless quantum simulations. Missing are strong classical alternatives: kernel methods, autoencoders, random projections, noise-augmented features, or nonlinear embeddings. Without these, it’s impossible to know whether a smart classical transformation could do as well or better.
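As a rough illustration of how cheap a nonlinear classical transform can be, here is a minimal sketch of random Fourier features, a standard randomized approximation to an RBF kernel. It is my own example of the kind of baseline I have in mind, not anything from the paper; the function name `make_rff`, the parameter values, and the input vectors are all made up for illustration.

```python
import math
import random


def make_rff(n_inputs, n_features, gamma=1.0, seed=0):
    """Build a random Fourier feature map approximating exp(-gamma * ||x - y||^2)."""
    rng = random.Random(seed)
    scale = math.sqrt(2.0 * gamma)  # std of the random frequencies for this kernel
    weights = [[rng.gauss(0.0, scale) for _ in range(n_inputs)]
               for _ in range(n_features)]
    offsets = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
    norm = math.sqrt(2.0 / n_features)

    def transform(x):
        # One cosine feature per random frequency vector and phase offset.
        return [norm * math.cos(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)
                for w, b in zip(weights, offsets)]

    return transform


rff = make_rff(n_inputs=3, n_features=2000, gamma=0.5, seed=42)
x = [0.2, -0.1, 0.4]   # made-up feature vectors
y = [0.1, 0.3, 0.0]

# The inner product of transformed vectors should be close to the exact kernel value.
approx = sum(a * b for a, b in zip(rff(x), rff(y)))
exact = math.exp(-0.5 * sum((a - b) ** 2 for a, b in zip(x, y)))
print(f"approximate kernel: {approx:.3f}, exact kernel: {exact:.3f}")
```

The transformed features can be fed to the same downstream models as any other feature set, which is what makes a transform like this a natural comparison point.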

3. Noise as a Feature (Not a Bug?)

If the improvement is driven by noise in hardware, then the “advantage” might come from stochasticity — something trivial to reproduce classically. One could imagine injecting structured randomness into classical features and getting similar benefits. Unless noise-based effects are ruled out, it’s premature to credit quantum mechanics.
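A minimal sketch of the classical control I mean, in its simplest and least structured form: add seeded Gaussian noise to the existing features and rerun the same downstream models. The helper name `add_gaussian_noise`, the noise level, and the feature rows are hypothetical; nothing here is drawn from the paper's data or method.

```python
import random


def add_gaussian_noise(rows, sigma=0.05, seed=0):
    """Return a copy of `rows` with independent Gaussian noise added to every value."""
    rng = random.Random(seed)
    return [[value + rng.gauss(0.0, sigma) for value in row] for row in rows]


# Made-up feature rows standing in for classical trade-event features.
features = [
    [0.12, 0.80, 0.33],
    [0.45, 0.10, 0.91],
]

noisy = add_gaussian_noise(features, sigma=0.05, seed=1)
for original, perturbed in zip(features, noisy):
    print(original, "->", [round(v, 3) for v in perturbed])
```

If a model trained on features like `noisy` matched the hardware-transformed results, the noise explanation would need no quantum device at all.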

4. Scalability is Unproven

Even if there is a modest empirical gain, does it persist at larger data scales, deeper models, or across other financial tasks? Without evidence of scaling or robustness, the impact is limited.

5. No Theoretical Hardness Argument

For a true claim of advantage, we would expect an argument that simulating the quantum transform classically is intractable. Nothing of the sort is provided. The paper is purely empirical, and thus its claims of “advantage” remain suggestive, not conclusive.

And Then There Is The Use of The Word Embedding

A special note about terminology: the authors follow the (in my opinion) ridiculous practice, found in the machine learning and quantum machine learning literature, of using the word "embedding" for the idea of encoding classical data as, or identifying it with, quantum data. In mathematics, an embedding is a map that preserves structure: for example, Whitney and Nash embeddings, which preserve differentiable and Riemannian (metric) structure, respectively. In the QML body of literature, “embedding” has been diluted into a loose synonym for “encode” or “map into another space.”

This linguistic slippage is unfortunate. It makes the method sound deeper and more mathematically grounded than it is. A Projected Quantum Feature Map (PQFM) is essentially an encoding of classical data into quantum states, followed by measurement back to classical features. Calling this an embedding is a kind of marketing gloss — a bastardization of a word that, in mathematics, has real content.
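To show what "encode, then measure back" can look like in the simplest possible case, here is a toy sketch. It is emphatically not the authors' circuit: I assume one qubit per feature, a single RY rotation by the feature value, no entangling gates, and measurement of the Pauli X and Z expectation values. The function name is made up.

```python
import math


def toy_projected_features(x):
    """Toy encode-and-measure map: RY(angle) on |0>, then <X> and <Z>, per feature."""
    features = []
    for angle in x:
        # RY(angle)|0> = cos(angle/2)|0> + sin(angle/2)|1>, with real amplitudes.
        a0 = math.cos(angle / 2.0)
        a1 = math.sin(angle / 2.0)
        exp_x = 2.0 * a0 * a1        # <X>, which equals sin(angle)
        exp_z = a0 * a0 - a1 * a1    # <Z>, which equals cos(angle)
        features.extend([exp_x, exp_z])
    return features


x = [0.3, 1.2]                                   # made-up input features
shifted = [v + 2.0 * math.pi for v in x]         # same angles, one full turn later

print([round(v, 4) for v in toy_projected_features(x)])
print([round(v, 4) for v in toy_projected_features(shifted)])
```

In this toy the output features are just sines and cosines of the inputs, and inputs that differ by a full turn land on the same features, so the map is not even injective. Whatever one calls a map like that, it is not an embedding in the mathematical sense.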

Conclusion

The paper is an ambitious and creative attempt to bring quantum methods into a real financial application. The empirical improvements are intriguing, and the hybrid approach is pragmatic. But as it stands, this is not evidence of genuine quantum advantage. At best, the work shows that some stochastic feature transform can help in this trading task. Whether the source of that randomness is a quantum device or a classical algorithm remains an open question.

And the careless language does not help. If you cannot even use the word embedding correctly, leaning instead on a bastardized machine-learning usage, then perhaps it is not surprising that the paper falls short of demonstrating true quantum advantage. But then, the markets loved it, as evidenced by some positive movement in IBM's share price, and that was surely the point.