Quantum Information, Game Theory, and the Future of Rationality

Strategic Design of Quantum Algorithms: Channeling von Neumann in Schrödinger’s Universe

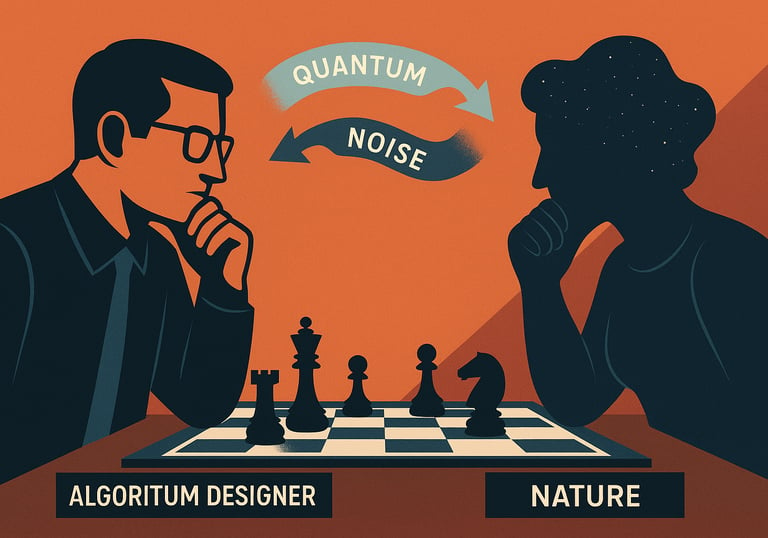

The design of quantum algorithms for near-term hardware must contend with the persistent challenge of noise and decoherence. One way to approach this problem is to frame it as a game between two entities: the algorithm designer and an adversarial environment, or "Nature," introducing the worst possible errors. This interaction lends itself to a game-theoretic perspective, where robustness and error mitigation become strategic responses to worst-case noise. Such a framework not only clarifies when an optimal algorithm is guaranteed to exist, but may also reveal efficient ways to compute it.

Faisal Shah Khan, PhD

6/9/20253 min read

When designing quantum algorithms for near-term noisy quantum architectures, one of the key challenges is anticipating how unavoidable effects such as noise and decoherence will impact performance. While this noise is typically modeled statistically or physically, we might also approach it from a different angle: treating it not only as a natural phenomenon, but as if it were the move of an opposing player in a strategic game, without abandoning its statistical and physical nature.

This is the starting point for a game-theoretic way of thinking about quantum algorithms. Imagine the algorithm designer as one player, and "Nature," representing environmental noise, as another. Each selects a strategy: the designer chooses a set of parameters that define a unitary process—effectively configuring the gates and control sequences of the algorithm to optimize performance. In response, Nature selects a noise model or a set of noise parameters that most degrade this performance, within the physical constraints of the system. Although Nature is not an intentional agent, modeling it adversarially allows us to reason about worst-case behavior in a structured and mathematical way. This shifts the focus from attempting to predict specific errors to preparing for the worst-case scenario, similar to planning for the strongest possible opponent.

Under this framing, we can apply ideas from game theory, specifically zero-sum games, to analyze the situation. The designer aims to maximize some performance metric, such as fidelity or success probability, while Nature seeks to minimize it. This setup allows us to invoke a classic result from game theory that guarantees stable outcomes, where neither side can improve its result by changing strategy unilaterally, even when the set of possible strategies is continuous and infinite, as is often the case in quantum systems. Depending on the structure of the game, this outcome is referred to as either a Nash equilibrium or, in zero-sum cases, a minimax solution.

The key result supporting this framework is Glicksberg’s generalization of the Kakutani fixed-point theorem, which ensures the existence of a Nash equilibrium in games with compact, convex strategy spaces and continuous payoffs. This is particularly relevant in quantum settings, where unitary operations and noise processes can often be parameterized over such well-behaved spaces. While real-world error models may not fully satisfy these mathematical conditions, they can frequently be approximated or bounded in ways that do. This makes Glicksberg’s theorem not only a theoretical foundation, but also a practical guide: it suggests how one might structure the space of allowable quantum strategies and noise behaviors to keep the analysis both realistic and tractable. In this way, game theory provides a rigorous yet flexible starting point for thinking about quantum algorithm design under realistic physical constraints.

From the designer’s point of view, this minimax formulation offers more than conceptual clarity. It defines what the optimal quantum algorithm should be under worst-case conditions. By assuming that Nature will select the most damaging noise process allowed by the physical model—such as a specific decoherence channel or a stochastic perturbation of the unitary gates—the designer can construct a strategy that performs as well as possible even in the most adversarial scenario. In other words, the solution to this game corresponds to the algorithm that guarantees the best achievable performance against the worst possible noise. It is not only effective, but robust by design.

A natural next question is whether this equilibrium can be computed. In many cases, the answer is yes. If the strategy spaces—such as the parameter sets defining unitaries and noise channels—are convex, and the payoff function (such as fidelity or success probability) is linear or convex-concave, then the equilibrium can be found by solving a type of optimization problem known as a semidefinite program. These programs can be solved efficiently, which means this framework does more than ensure the existence of an optimal strategy; it also provides a tractable method for finding it.

This approach may also offer insights into quantum error mitigation. Typically, error mitigation involves heuristics or circuit-level adjustments that reduce the impact of noise without achieving full error correction. However, if one models mitigation strategies as moves in a game against an adversarial noise source, it may be possible to formalize robust strategies—countermeasures that perform well across a wide range of potential errors—using similar game-theoretic tools. This could help identify mitigation protocols that are not only empirically effective, but provably optimal under worst-case assumptions.

This perspective provides a principled method for designing quantum algorithms that are robust against the most damaging forms of noise, rather than simply optimized for average-case performance. It also brings together ideas from quantum computing, decision theory, and optimization in a coherent and productive way. Whether one is "gaming the quantum," by directly manipulating quantum systems, or "quantizing the game," by using quantum tools to enhance strategic outcomes, these models offer a formal structure for preparing intelligently for the inherent uncertainty of quantum computation.